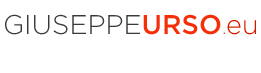

In this post I show how to configure a Mysql Cluster with a load balancer pfSense, the open-source firewall based on the FreeBSD distro. At first I install the Mysql Cluster instance (multi-master configuration), then the pfSense TCP load balancer.

MySQL Cluster consists of three different components:

1. Management Node (mgm): for the configuration and monitoring of the cluster

2. Data Node (dn): the data store

3. SQL Node (mysqld): the mysqld daemon that connects to all of the data nodes in order to perform data storage and retrieval

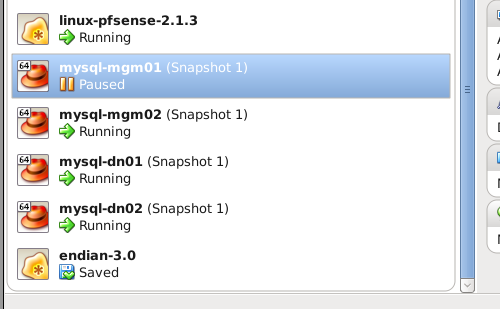

In this guide, I use the same server for the mgm and mysqld components and a second server for the data node. All Mysql servers will be replicated for a minimal High Availability configuration. For the pfSense instance I use a single server (you can configure an optional second server for failover). I create the Balanced Mysql Cluster infrastructure using the Virtualbox hypervisor with a total of 5 virtual machines, see the following image.

VIRTUAL MACHINES

IP 10.10.10.1 – lb01 (load balancer pfSense)

IP 10.10.10.10 – mgm01 (mgm+mysqld)

IP 10.10.10.11 – mgm02 (mgm+mysqld)

IP 10.10.10.20 – dn01 (data node)

IP 10.10.10.21 – dn02 (data node)

APPLICATION STACK

– SO Linux CentOS 6.5 64bit Minimal

– VirtualBox 4.2.16

– Mysql Cluster Server gpl 7.3.5

– pfSense 2.1.3

ROADMAP

STEP 1. Preparing the environment

STEP 2. Setup Mysql Management nodes (mgm01, mgm02)

STEP 3. Setup Mysql Data nodes (dn01, dn02)

STEP 4. Setup Mysql Daemon nodes (mgm01, mgm02)

STEP 5. Setup pfSense Load Balancer

STEP 6. Test load balancer

STEP 1. Preparing the environment

For the operative system I perform two kind of installation: a) setup and post-conf of CentOS for the Mysql Cluster b) setup and post-conf of pfSense for the Load Balancer I create the first virtual machines named mgm01 with a minimal CentOS installation. Before I proceed, let’s set some important post-configurations.

$ vi /etc/selinux/config SELINUX=disabled $ chkconfig iptables off $ vi /etc/hosts 10.10.10.10 mgm01 10.10.10.11 mgm02 10.10.10.20 dn01 10.10.10.21 dn02

$ vi /etc/sysconfig/network-scripts/ifcfg-eth0 DEVICE=eth0 NM_CONTROLLED=yes ONBOOT=yes BOOTPROTO=static IPADDR=10.10.10.1 NETMASK=255.255.255.0 GATEWAY=10.10.10.1

$ yum install ntp $ chkconfig ntpd on $ ntpdate pool.ntp.org $ /etc/init.d/ntpd start $ yum install perl $ wget http://dev.mysql.com/get/Downloads/MySQL-Cluster-7.3/MySQL-Cluster-server-gpl-7.3.5-1.el6.x86_64.rpm

After the post-confs, I shutdown the mgm01 machine then I clone it. To create the Mysql Cluster architecture I need a total amount of 5 virtual machine: mgm01, mgm02, dn01, dn02. After cloning you must revisiting the network configurations. Here is a memo.

# Network interface $ vi /etc/sysconfig/network-scripts/ifcfg-eth0 # Hostname $ vi /etc/sysconfig/network

STEP 2. Setup Mysql Management nodes (mgm01, mgm02)

I continue with the Mysql Management Nodes in failover configuration. You must repeating these settings both on mgm01 and mgm02.

$ rpm -Uhv --force MySQL-Cluster-server-gpl-7.3.5-1.el6.x86_64.rpm mkdir /var/lib/mysql-cluster

$ vi /etc/my.cnf [mysqld] datadir=/var/lib/mysql socket=/var/lib/mysql/mysql.sock user=mysql symbolic-links=0 ndbcluster ndb-connectstring=mgm01,mgm02 default-storage-engine=ndbcluster [mysqld_safe] log-error=/var/log/mysqld.log pid-file=/var/run/mysqld/mysqld.pid [mysql_cluster] ndb-connectstring=mgm01,mgm02

$ vi /var/lib/mysql-cluster/config.ini [ndbd default] NoOfReplicas=2 DataMemory=80M IndexMemory=18M [ndb_mgmd] NodeId=1 HostName=mgm01 DataDir=/var/lib/mysql-cluster [ndb_mgmd] NodeId=2 HostName=mgm02 DataDir=/var/lib/mysql-cluster [ndbd] HostName=dn01 DataDir=/var/lib/mysql-cluster/data [ndbd] HostName=dn02 DataDir=/var/lib/mysql-cluster/data [mysqld] HostName=mgm01 [mysqld] HostName=mgm02

# Start mysql management (mgm01) $ ndb_mgmd --ndb-nodeid=1 -f /var/lib/mysql-cluster/config.ini # Start mysql management (mgm02) $ ndb_mgmd --ndb-nodeid=2 -f /var/lib/mysql-cluster/config.ini

STEP 3. Setup Mysql Data nodes (dn01, dn02)

I continue with the Data Nodes. You must repeating these settings both on dn01 and dn02.

$ rpm -Uhv --force MySQL-Cluster-server-gpl-7.3.5-1.el6.x86_64.rpm $ mkdir -p /var/lib/mysql-cluster/data

$ vi /etc/my.cnf [mysqld] datadir=/var/lib/mysql socket=/var/lib/mysql/mysql.sock user=mysql symbolic-links=0 ndbcluster ndb-connectstring=mgm01,mgm02 default-storage-engine=ndbcluster [mysqld_safe] log-error=/var/log/mysqld.log pid-file=/var/run/mysqld/mysqld.pid [mysql_cluster] ndb-connectstring=mgm01,mgm02

$ chkconfig --level 2345 mysqld off # Start mysql data $ ndbmtd

STEP 4. Setup Mysql Daemon nodes (mgm01, mgm02)

Finally I configure the mysqld daemon both on mgm01 and mgm02 machines. After the mysql startup, use the “mysql_secure_installation” command to complete the MySQL setup. If you want to have the synchronizing of grant privileges on every node, you must perform some SLQ commands. See below.

# Start mysql service /etc/init.d/mysqld start # Installation using default random /root/.mysql_secret $ mysql_secure_installation

#Setup mysql privileges synchronous replication $ mysql -uroot -proot < /usr/share/mysql/ndb_dist_priv.sql $ mysql -uroot -proot

> SELECT ROUTINE_NAME, ROUTINE_SCHEMA, ROUTINE_TYPE FROM INFORMATION_SCHEMA.ROUTINES WHERE ROUTINE_NAME LIKE 'mysql_cluster%' ORDER BY ROUTINE_TYPE;

> CALL mysql.mysql_cluster_move_privileges();

> SELECT CONCAT('Conversion ', IF(mysql.mysql_cluster_privileges_are_distributed(), 'succeeded', 'failed'), '.') AS Result;

> SELECT TABLE_NAME, ENGINE FROM INFORMATION_SCHEMA.TABLES WHERE TABLE_SCHEMA = 'mysql' AND TABLE_NAME LIKE '%backup' ORDER BY ENGINE;

> GRANT USAGE ON *.* to root@'%' IDENTIFIED BY 'root';

> FLUSH PRIVILEGES;

# To check mysql cluster status $ ndb_mgm ndb_mgm> show Cluster Configuration --------------------- [ndbd(NDB)] 2 node(s) id=3 @10.10.10.20 (mysql-5.6.17 ndb-7.3.5, Nodegroup: 0, *) id=4 @10.10.10.21 (mysql-5.6.17 ndb-7.3.5, Nodegroup: 0) [ndb_mgmd(MGM)] 2 node(s) id=1 @10.10.10.10 (mysql-5.6.17 ndb-7.3.5) id=2 @10.10.10.11 (mysql-5.6.17 ndb-7.3.5) [mysqld(API)] 2 node(s) id=5 @10.10.10.10 (mysql-5.6.17 ndb-7.3.5) id=6 @10.10.10.11 (mysql-5.6.17 ndb-7.3.5)

STEP 5. Setup pfSense Load Balancer

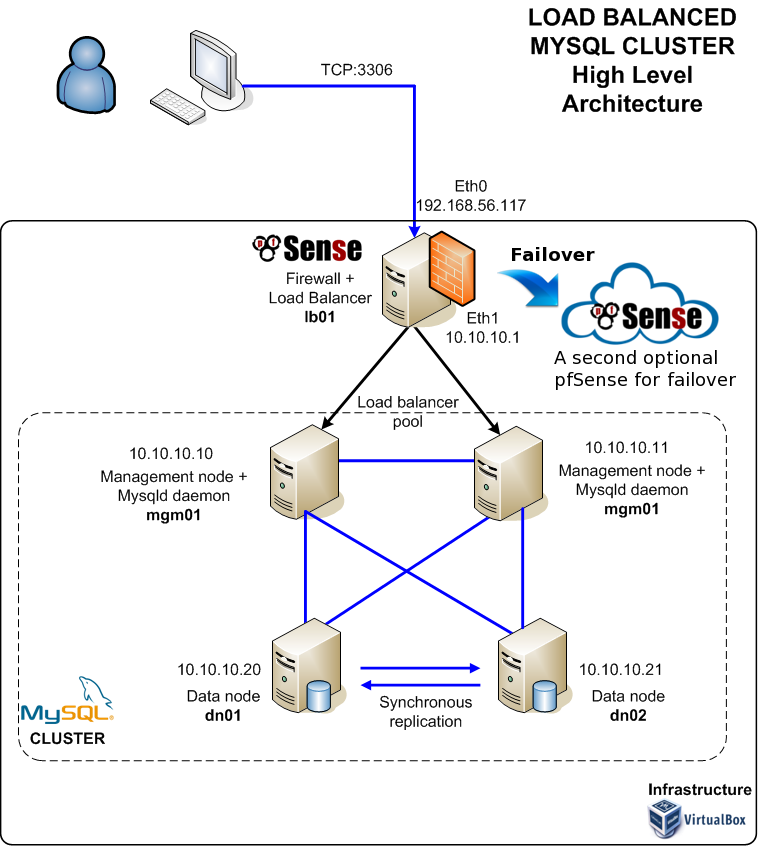

For the load balancer I create a pfSense new virtual machine with 2 network interfaces for WAN and LAN. The second NIC will be the defaut gateway for the mysql cluster network. Ensure that servers mgm01 and mgm02 have pfSense set as their gateway (10.10.10.1).

em0 --> WAN (Host Only) em1 --> LAN (IInternal Network) IP class for mysql cluster

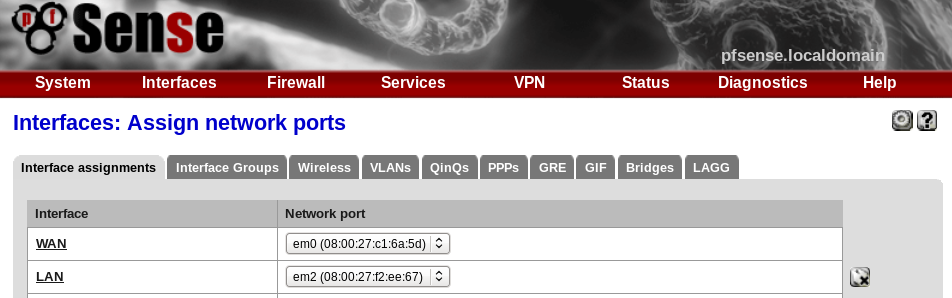

# Opening all inbound traffic Firewall > Rules > pass (WAN, LAN)

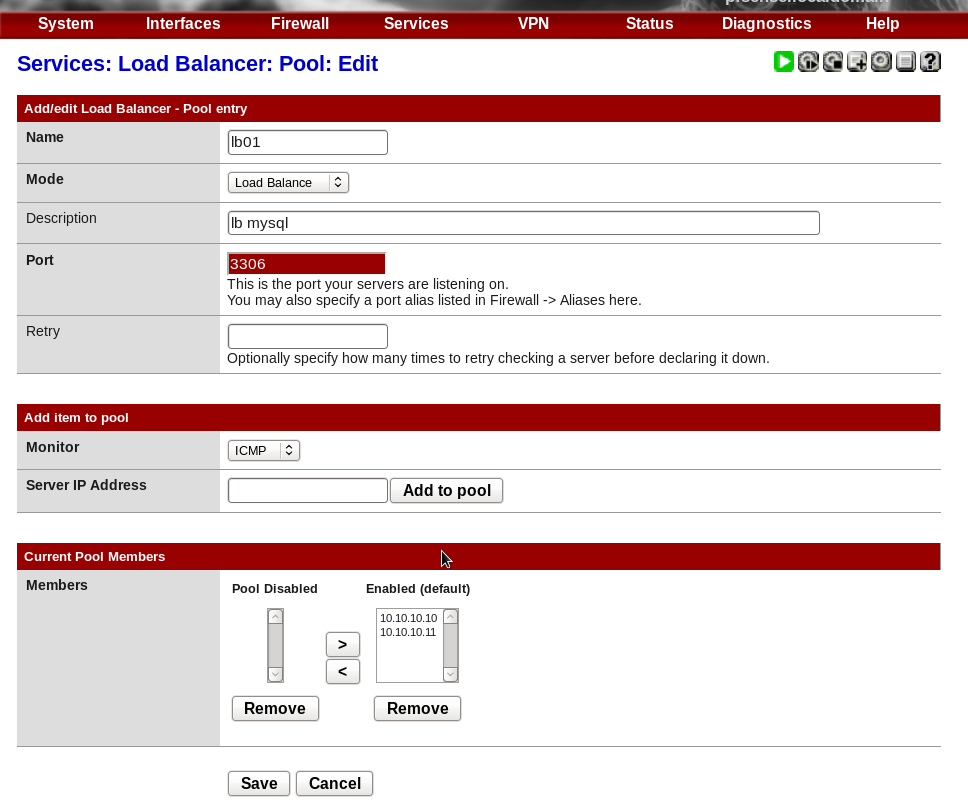

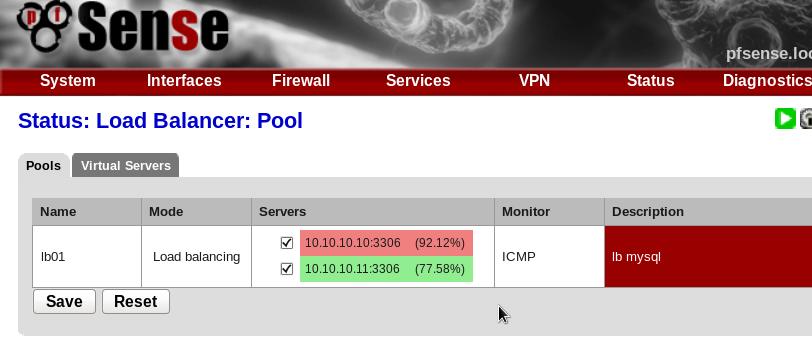

# Adding pool of resources for load balancer Services > Load Balancer > Pools >

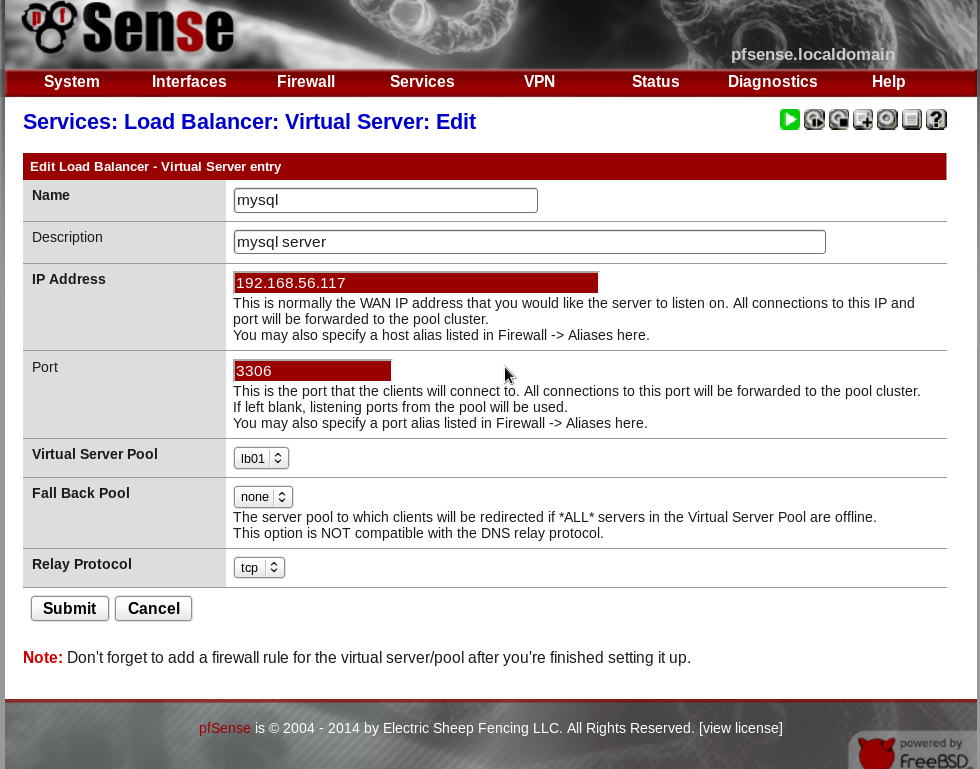

# Adding a Virtual Server for load balancer Services > Load Balancer > Virtual Servers > Services > Load Balancer > Settings > interval 3 sec

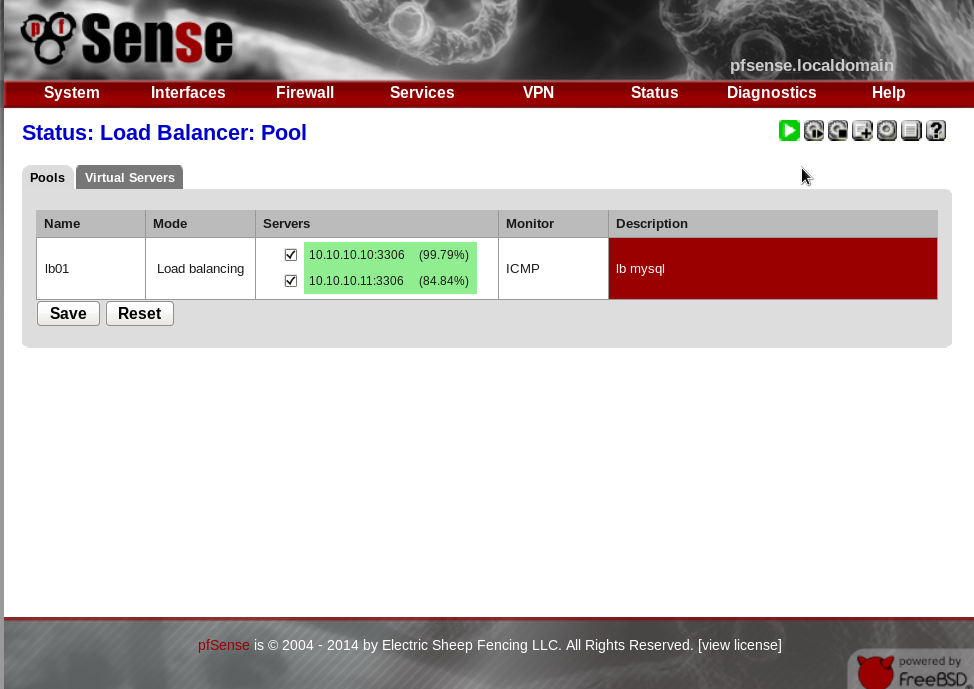

# Check status of load balancer Status > Load Balancer > Pools >

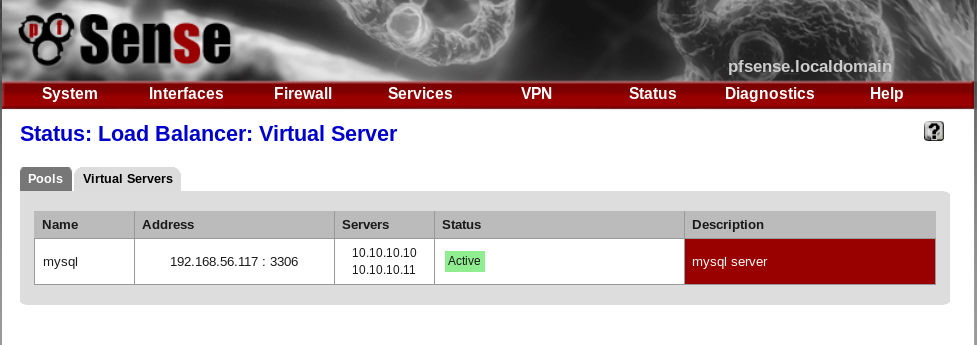

#Check status of virtual server Status > Load Balancer > Virtual Servers >

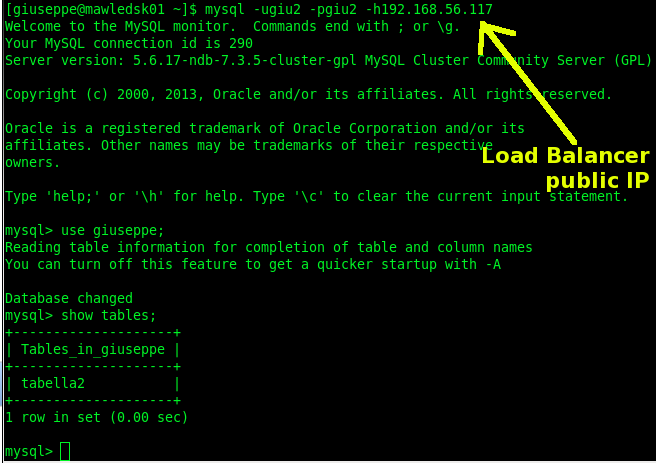

STEP 6. Test load Balancer

You can simulate a downtime event on the mgm01 or mgm02 machine. Open the Virtualbox control panel and put the mgm01 in “pause” mode. Now try to connect the mysql cluster using the pfSense WAN IP. If no errors occur, you can see the mysql client console shown below.

English

English Italian

Italian

Excellent work.Thanks for this nice post.

Thank you!

I want to share a collection of tutorial for quick use.

I hope can be useful.

Hi,

Thank you for the post,

One thing! when you get to the last part, when you want to connect via the public ip (wan) what credencial do you put, becouze when i put mysql -uroot -p -h192.168.X.X (the same that we put on the virtual server entry) i can´t it sais “Can´t connect to mysql server on 192.168.X.X”

Thank´s again

Hi,

in order to test a mysql connection through the public IP (wan) of the pfSense, you should open all inbound traffic for the wan and lan interfaces (check section: Firewall > Rules > pass WAN, LAN ).

Make sure that port 3306 on pfSense is open, you can run nmap or telnet to check it:

> telnet 192.168.56.117 3306

or

> nmap 192.168.56.117 | grep 3306

Giuseppe